Key Takeaways: Understand the distinction between AI inference and training, explore fine-tuning techniques for large language models (LLMs), and discover the best practices for deploying AI models in production. This guide offers a roadmap for learning and advancing your career in the rapidly evolving AI landscape.

Introduction

The artificial intelligence (AI) landscape has shifted in recent years: attention is moving from training robust models to deploying them for inference. As demand for AI applications grows, so does the need for professionals skilled in model deployment and inference. This post covers the core concepts and methods behind AI inference, highlights practical applications, and offers actionable advice for building a career in this niche.

Technical Background and Context

AI inference is the process of using a trained AI model to make predictions on new data. Training iteratively adjusts a model's parameters using historical data. Inference keeps those parameters fixed and runs the model on fresh inputs inside real-world applications. The shift toward inference is largely driven by growing demand for fast, accurate predictions in enterprise settings.
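To make the distinction concrete, here is a minimal sketch in plain Python. It assumes a toy one-variable linear model and made-up data that follows y = 2x + 1; the function names `train` and `predict` are illustrative and not taken from any library. Training loops over the historical data and updates the parameters, while inference is a single pass with the parameters held fixed.

```python
# Toy model: y = w * x + b. The data below is invented and follows y = 2x + 1.
def predict(params, x):
    """Inference: one forward pass with fixed parameters."""
    w, b = params
    return w * x + b


def train(data, lr=0.05, epochs=2000):
    """Training: repeatedly adjust (w, b) to reduce mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


historical_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
params = train(historical_data)      # done once, offline
prediction = predict(params, 10.0)   # done per request, on new data
print(round(prediction, 3))
```

In a real system the training step would typically run in a separate pipeline, and only the learned parameters would be shipped to the service that calls the inference function.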

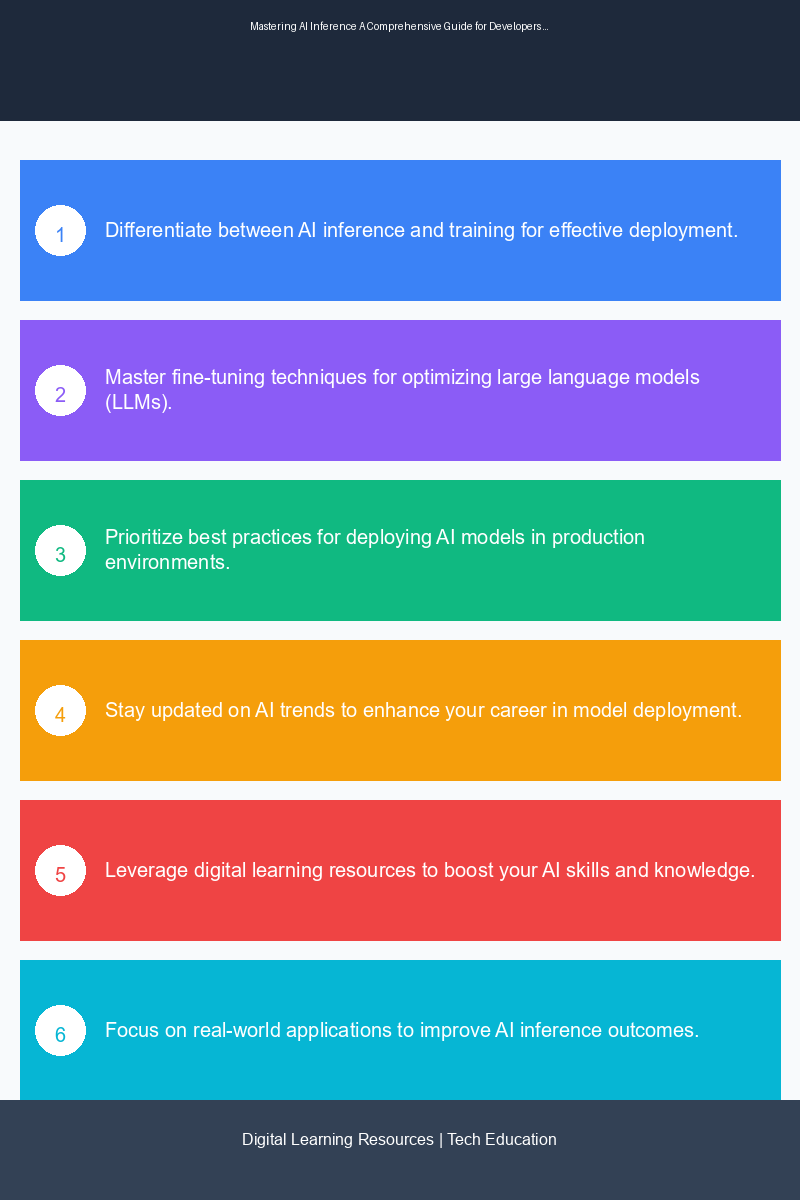

📊 Key Learning Points Infographic

Visual summary of key concepts and actionable insights

Fine-tuning large language models (LLMs) is one area that has seen significant advances. Techniques such as low-rank adaptation (LoRA) and reinforcement learning are used to improve LLM performance in specific applications. For rapid prototyping, developers can often deploy and test these models through a single application programming interface (API) call.
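As a rough illustration of why low-rank adaptation is attractive, the sketch below assumes the standard formulation in which a frozen weight matrix W is augmented with a trainable product B·A of rank r. The layer size (4096 × 4096), the rank (8), and all function names are assumptions chosen for illustration; they are not taken from any particular model or library.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_param_counts(d_out, d_in, rank):
    """Return (full fine-tuning params, LoRA trainable params) for one weight matrix."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)  # B is d_out x rank, A is rank x d_in
    return full, lora


def lora_forward(W, A, B, x, scale=1.0):
    """Compute (W + scale * B @ A) @ x without modifying the frozen W."""
    base = matmul(W, x)
    update = matmul(B, matmul(A, x))
    return [[b + scale * u for b, u in zip(brow, urow)]
            for brow, urow in zip(base, update)]


full, lora = lora_param_counts(4096, 4096, rank=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%

# Tiny demo: 4x4 frozen weight with a rank-2 adapter.
random.seed(0)
d, r = 4, 2
W = [[random.gauss(0, 1) for _ in range(d)] for _ in range(d)]
A = [[random.gauss(0, 1) for _ in range(d)] for _ in range(r)]
B = [[0.0] * r for _ in range(d)]  # B starts at zero, so the adapter is a no-op
x = [[1.0], [2.0], [3.0], [4.0]]   # column vector
print(lora_forward(W, A, B, x) == matmul(W, x))  # True
```

Because B starts at zero, the adapted layer initially behaves exactly like the original one; fine-tuning then updates only A and B, which in this example is under half a percent of the parameters in the full matrix.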

Practical Applications and Use Cases

As AI inference continues to gain traction, its applications span numerous industries:

- Healthcare: AI models can analyze medical images or patient data to assist in diagnostics and treatment plans.

- Finance: Inference models can forecast market trends and assess risk using real-time data analysis.

- Retail: Personalized recommendations can be generated through AI inference to enhance customer experiences and drive sales.

- Autonomous Vehicles: AI inference is crucial for real-time decision-making in self-driving technology.

- Natural Language Processing: Chatbots and virtual assistants utilize LLMs for effective customer interaction.

Learning Path Recommendations

For developers and IT professionals aiming to specialize in AI inference, consider the following learning opportunities:

- AI Inference Fundamentals: Start with courses that cover the basics of AI inference, focusing on its applications and impact in various industries.

- Fine-Tuning Techniques: Enroll in specialized training that focuses on low-rank adaptation and reinforcement learning for LLMs.

- Model Deployment Training: Gain hands-on experience with tools and platforms such as Fireworks AI and cloud-based infrastructures tailored for AI workloads.

- Programming and API Integration: Enhance your skills in programming languages like Python and learn API integration to facilitate rapid prototyping.
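For a sense of what API integration looks like in Python, the sketch below builds (but does not send) a JSON request for a hosted text-generation model using only the standard library. The endpoint URL, model ID, and payload shape are hypothetical placeholders modeled on the chat-style format many hosted providers use; check your provider's documentation for the real values.

```python
import json
import urllib.request

# Hypothetical values: replace with your provider's documented endpoint and model ID.
API_URL = "https://api.example.com/v1/chat/completions"
MODEL_ID = "example-model"


def build_request(prompt, api_key, max_tokens=64):
    """Build (but do not send) a JSON POST request for a text-generation endpoint."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_request("Summarize AI inference in one sentence.", api_key="YOUR_API_KEY")
print(request.get_method(), request.full_url)

# To actually send it (requires a real endpoint and key):
# with urllib.request.urlopen(request, timeout=30) as resp:
#     body = json.load(resp)
```

Keeping request construction separate from sending makes the code easy to test offline and easy to point at a different provider later.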

Industry Impact and Career Implications

The shift in focus from training to inference is creating new career opportunities. Professionals who can deploy AI models are in demand, particularly in industries that use AI for competitive advantage. Growing investment in AI inference platforms and specialized hardware, such as GPUs and AI accelerators, further underscores this trend.

As organizations seek to harness the power of AI, they require skilled individuals who can integrate these technologies into existing systems. Acquiring skills in AI model deployment, inference, and the associated tools will position you favorably in the job market.

Implementation Tips and Best Practices

To successfully implement AI inference in your projects, consider the following best practices:

- Start Small: Begin with simpler models and gradually scale up to more complex ones. This will help you understand the nuances of inference without becoming overwhelmed.

- Monitor Performance: Continuously evaluate the performance of your deployed models. Track metrics such as accuracy and latency, and adjust or retrain the model when they degrade.

- Utilize Cloud Infrastructure: Leverage cloud-based solutions designed for AI workloads. This allows for scalability and reduces the need for extensive on-premise resources.

- Embrace Open Source: Utilize frameworks like PyTorch for developing and fine-tuning AI models. Open-source tools often have robust community support and resources.

- Stay Updated: The AI field evolves rapidly. Engage in continuous learning through webinars, online courses, and industry conferences to stay abreast of the latest developments.
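The monitoring practice above can be sketched with a small rolling-window tracker. This is a minimal illustration, not a production monitoring stack: the class name `InferenceMonitor`, the window size, and the sample numbers are all assumptions made for the example.

```python
import math
import time
from collections import deque


class InferenceMonitor:
    """Track latency and accuracy over the most recent `window` predictions."""

    def __init__(self, window=1000):
        self.latencies_ms = deque(maxlen=window)
        self.correct = deque(maxlen=window)

    def record(self, latency_ms, prediction, label=None):
        self.latencies_ms.append(latency_ms)
        if label is not None:  # ground truth often arrives later, or never
            self.correct.append(prediction == label)

    def latency_percentile(self, pct):
        """Nearest-rank percentile of recorded latencies, in milliseconds."""
        ordered = sorted(self.latencies_ms)
        if not ordered:
            return None
        rank = max(1, math.ceil(pct / 100 * len(ordered)))
        return ordered[rank - 1]

    def accuracy(self):
        return sum(self.correct) / len(self.correct) if self.correct else None


def timed_predict(model_fn, x, monitor, label=None):
    """Run model_fn(x), record how long it took, and return the prediction."""
    start = time.perf_counter()
    prediction = model_fn(x)
    monitor.record((time.perf_counter() - start) * 1000, prediction, label)
    return prediction


monitor = InferenceMonitor(window=100)
# Invented sample: (latency in ms, predicted class, true class)
for latency, pred, label in [(12.0, 1, 1), (15.0, 0, 1), (11.0, 1, 1), (90.0, 0, 0)]:
    monitor.record(latency, pred, label)

print(monitor.latency_percentile(50), monitor.latency_percentile(95), monitor.accuracy())
```

Tail latency (for example the 95th percentile) is often more informative than the average, because a few slow requests can dominate the user experience even when most calls are fast.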

Future Trends and Skill Requirements

As the AI industry evolves, certain trends are likely to shape the future of AI inference:

- Increased Specialization: There will be a growing need for specialized skills in AI inference, particularly as new tools and technologies emerge.

- Edge Computing: As IoT devices proliferate, AI inference will increasingly be performed on the edge, necessitating a different set of skills and knowledge.

- Ethical Considerations: Understanding the ethical implications of AI will become essential as its applications expand, particularly in sensitive areas like healthcare and finance.

To prepare for these future trends, professionals should focus on enhancing their skills in AI deployment, understanding new architectures, and getting acquainted with ethical AI practices.

Conclusion with Actionable Next Steps

AI inference presents an exciting frontier for developers and IT professionals. By understanding the critical concepts, practical applications, and industry trends discussed in this guide, you can better position yourself for success in this evolving field.

Actionable Next Steps:

- Identify and enroll in relevant online courses to enhance your understanding of AI inference and model deployment.

- Experiment with tools like the Fireworks AI platform and PyTorch to gain hands-on experience.

- Network with professionals in the AI field through forums, conferences, and social media to stay connected and informed.

- Take on personal projects that involve deploying AI models to solidify your skills and build a portfolio.

By taking these steps, you’ll be well on your way to mastering AI inference and advancing your career in the tech industry.

Disclaimer: The information in this article has been gathered from various reputable sources in the public domain. While we strive for accuracy, readers are advised to verify information independently and consult with professionals for specific technical implementations.
